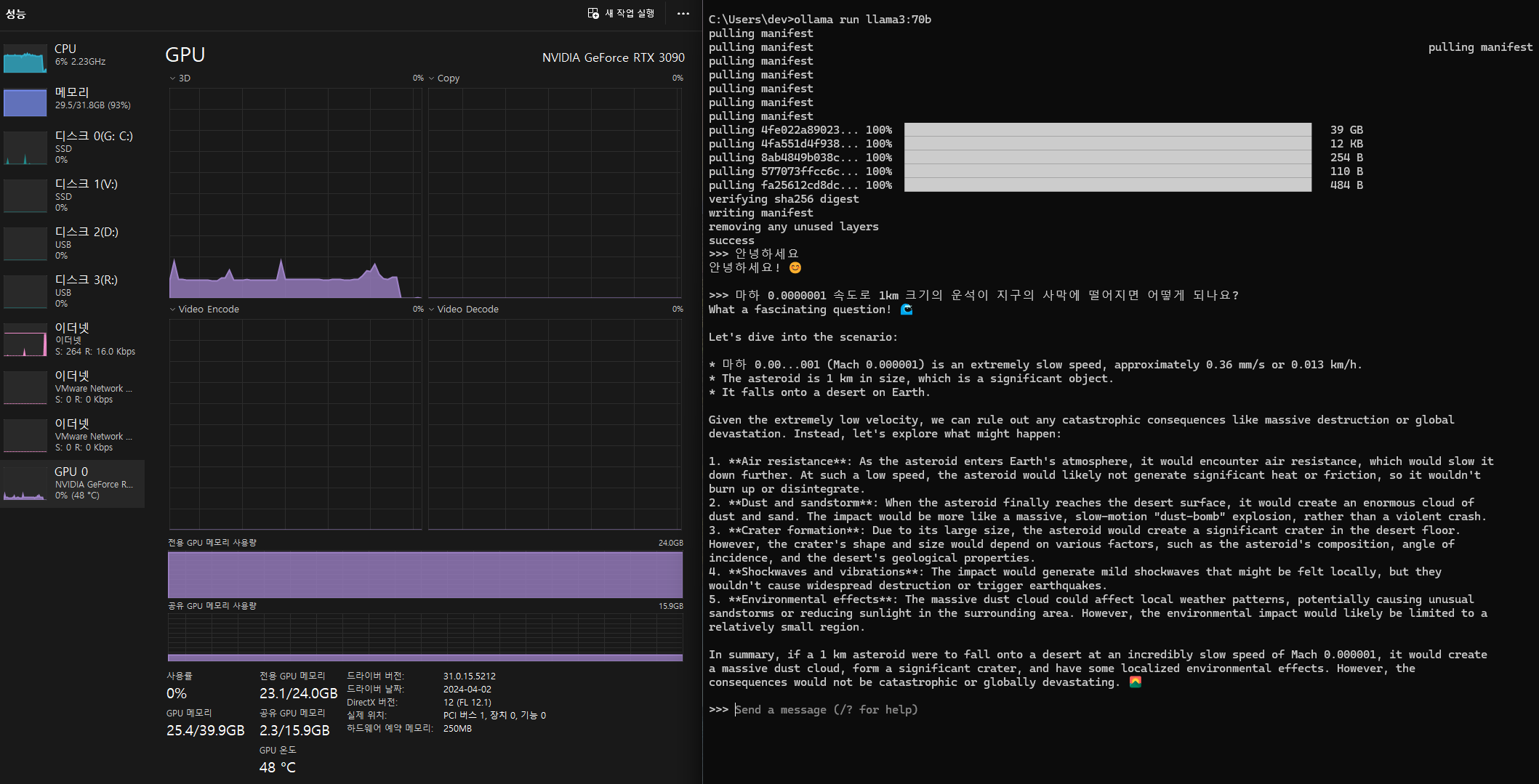

“I tried running Meta’s latest open-source LLM, Meta-Llama-3-70B. Even using all 24GB of GDDR6X VRAM on the RTX 3090 FE plus the system’s 32GB of DDR5 RAM, it runs very slowly. I suspect the 70B model needs more memory than this setup provides.”
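A rough back-of-the-envelope check supports that suspicion. The post does not say which precision or runtime was used, so the sketch below assumes nominal parameter counts (70e9 and 8e9) and a few common weight precisions. It counts weight storage only, ignoring KV cache, activations, and runtime overhead, so real usage would be higher. When weights do not fit in VRAM, runtimes that support it typically offload layers to system RAM, which is much slower than running entirely on the GPU.

```python
# Rough weight-only memory estimate for Llama 3 models.
# Assumptions (not from the original post): nominal parameter counts,
# and the precisions listed below. KV cache, activations, and runtime
# overhead are ignored, so actual memory use is higher than shown.

BYTES_PER_PARAM = {
    "fp16": 2.0,
    "int8": 1.0,
    "4-bit": 0.5,
}

VRAM_GB = 24        # RTX 3090 FE
SYSTEM_RAM_GB = 32  # DDR5 system memory in this machine


def weight_gb(num_params: float, precision: str) -> float:
    """Return the storage needed for the weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def placement(gb: float) -> str:
    """Classify where weights of this size could live on this machine."""
    if gb <= VRAM_GB:
        return "fits in VRAM"
    if gb <= VRAM_GB + SYSTEM_RAM_GB:
        return "needs CPU offload (slow)"
    return "exceeds VRAM + RAM"


if __name__ == "__main__":
    for name, params in [("Llama-3-70B", 70e9), ("Llama-3-8B", 8e9)]:
        for prec in BYTES_PER_PARAM:
            gb = weight_gb(params, prec)
            print(f"{name:12s} {prec:6s} {gb:6.1f} GB  {placement(gb)}")
```

Under these assumptions, the 70B model needs about 140 GB at fp16 and about 35 GB even at 4-bit, so at best it has to spill into system RAM, while the 8B model (about 16 GB at fp16) fits entirely in the 3090's 24 GB of VRAM.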

Running Meta-Llama-3-8B: speed is good.